As someone working in a creative field, I’ve never been concerned about a computer taking my job. I always felt confident that the tasks required of me as a photo editor for New York Magazine are too complex and messy — too human — for an artificial intelligence to perform. That is, until DALL-E 2, a sophisticated AI that generates original artwork based only on text input, opened to public beta last July.

It’s easy to lose hours on the r/dalle2 subreddit, where beta testers have been posting their work. More often than not, the only way to differentiate a DALL-E creation from a human-generated image is five colorful squares tucked in the bottom right corner of each composition — DALL-E’s signature. As I scrolled through images of Super Mario getting his citizenship at Ellis Island and Mona Lisa painting a portrait of da Vinci, I couldn’t shake the question that town criers and elevator operators of yore must have confronted: Was my obsolescence on the horizon?

DALL-E, named after surrealist artist Salvador Dalí and Pixar’s lovable garbage robot WALL-E, was released by San Francisco-based research lab OpenAI in January 2021. The first iteration felt like a curious novelty, but nothing more. The compositions were only remarkable because they were generated by AI. In contrast, DALL-E 2, which launched in April 2022, is light-years ahead in image complexity and natural semantics; it’s easily one of the most advanced image generators in development, and it’s evolving at an astonishing speed. Last week, OpenAI launched a new Outpainting feature, which allows users to extend their canvas beyond its original borders, revealing, for example, the cluttered kitchen surrounding Johannes Vermeer’s Girl With a Pearl Earring.

Like other forms of artificial intelligence, DALL-E stirs up deep existential and ethical questions about imagery, art, and reality. Who is the artist behind these creations: DALL-E or its human user? What happens when fake photorealistic images are unleashed on a public that already struggles to distinguish fact from fiction? Will DALL-E ever be self-aware? How would we know?

These are all important ideas that I’m not interested in exploring. I just wanted to see if I need to add “robot takes my job” to the long list of things that make me anxious about the future. So I decided to put my AI competition to the test.

I have one of those ambiguous job titles that no one understands, like “marketing consultant” or “vice-president.” In the most basic terms, my job as photo editor is to find or produce the visual elements that accompany New York Magazine articles. The mechanics of how DALL-E and I do our work are pretty similar. We both receive textual “prompts” — DALL-E from its users, I from editors. We then synthesize that information to produce visuals that are (hopefully) compelling and accurate to the ideas in play. My toolkit includes a corporate Getty subscription, countless hours of Photoshop experience, and an art degree that cost me an offensive amount of money. DALL-E’s toolkit is the millions of visual data points that it’s been trained on, and the algorithms that allow it to link those concepts together to create images.

For our competition, I set simple rules. If DALL-E was able to produce an image that was pretty close to my original artwork without too much hand-holding, the AI won the round. If it needed my stylistic guidance or wasn’t able to produce something satisfying at all, I’d award myself (and humanity) a point.

Challenge No. 1: People Problems

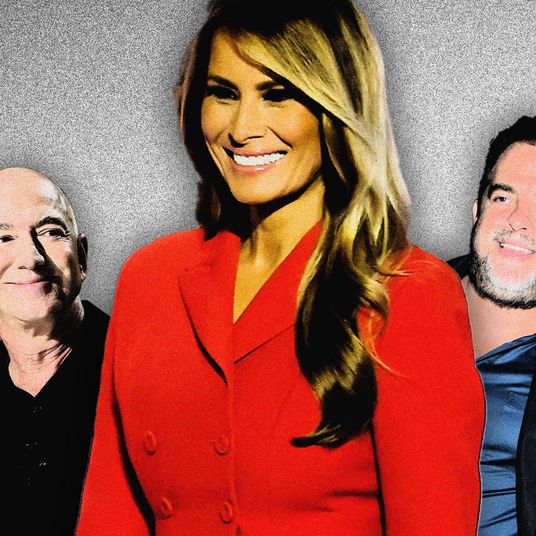

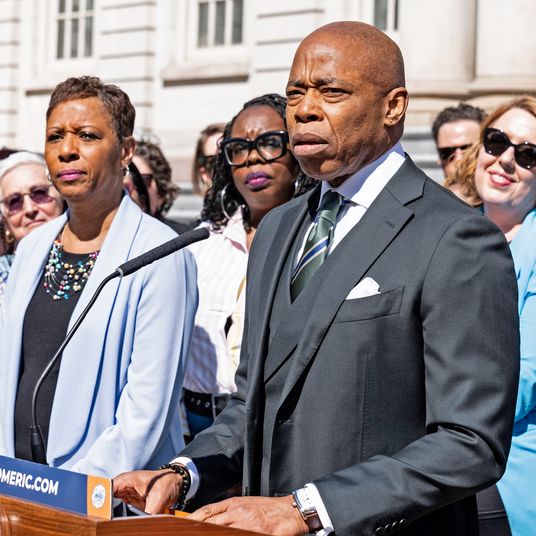

The folks at OpenAI are keenly aware of their tool’s potential for abuse, so they’ve put strict guardrails on what DALL-E can generate. DALL-E 2 cannot work with photos of real people (including public figures), while I, on the other hand, have complete freedom to make masterpieces like this:

Or this:

Let’s not forget this gem:

SCORE

Humanity: 1

DALL-E: 0

Challenge No. 2: Make Illustration Great Again

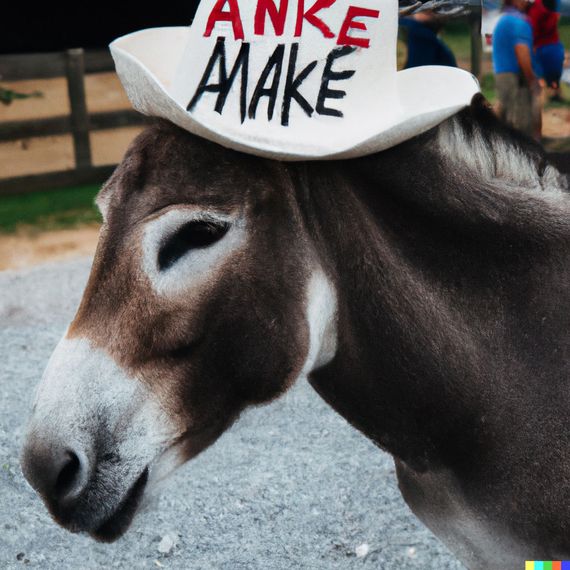

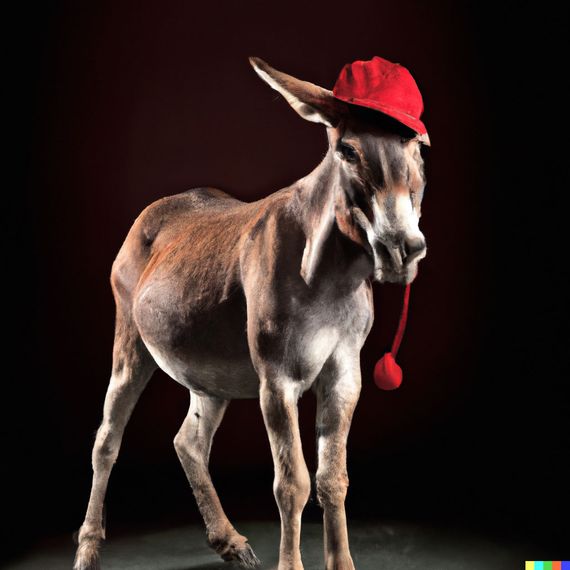

Maybe DALL-E would fare better with conceptual photo illustrations. Starting with something simple, I gave it the exact prompt I was given: “A donkey, wearing a Make America Great Again hat.” Easy enough; I had put this image together pretty quickly:

DALL-E is not the first to struggle with the concept of MAGA:

DALL-E 2, a model with 3.5 billion parameters, was trained on captioned images, and it’s designed to respond to natural language prompts — like you’re talking to a human, not a computer. “It’s kind of like teaching a child about concepts through flashcards,” Joanne Jang, product manager at OpenAI, told me. If you show DALL-E enough photos captioned “giraffe” and “yoga,” it will begin to understand them as concepts. When a user asks DALL-E to make an image that doesn’t exist, like a giraffe doing yoga, it’s able to take what it knows about “giraffe” and “yoga” and generate an image.

Part of the problem appeared to be that DALL-E knew nothing about Donald Trump’s campaign slogan. But “a donkey wearing a red baseball hat” did not yield better results:

I gave it a few more stylistic inputs, but wasn’t ultimately too excited by the final product:

Without guidance, the donkeys DALL-E generated ranged from boring to unsettling.

SCORE

Humanity: 2

DALL-E: 0

Challenge No. 3: Visual Thinking

It takes DALL-E about one minute to give you four image options. From there, you can create additional variations of your favorite, and make edits for things that aren’t working. As a tool, it’s incredibly easy to use — just type and wait. Extracting a satisfying image from DALL-E, on the other hand, is no simple task. Without giving it any stylistic guidelines (“digital art” or “surrealist” or “sci-fi” for example) the images DALL-E generates tend to be reminiscent of tragically terrible stock photography.

Plus, the language that makes a good headline does not necessarily translate to a good image. The person driving DALL-E still needs to be thinking visually. This became painfully clear when I asked DALL-E to visualize the housing crisis. When a writer requested “a photo that signals the problem of rent just going through the roof everywhere,” I came up with this:

Now, DALL-E’s turn:

Not great. DALL-E clearly struggles with non-visual language. But when I got descriptive with the program, I was surprised by how close the results were to the original illustration, with no stylistic guidance:

SCORE

Humanity: 2

DALL-E: 1

Challenge No. 4: Self-Conceptual Art

For the finale, I thought I’d dive into a subject where DALL-E may have the advantage: NFTs. Who better to dissect the digital art world than a purely digital artist? For the piece “How Museums Are Trying to Figure Out What NFT Art Is Worth,” I came up with this illustration.

At this point I’d figured out that the vaguer you were with DALL-E, the more puzzling the results were going to be — at least to the human eye. It couldn’t make the leap from a headline to an artistic concept; it could only try to piece together a visual from the words provided. Dropping in the prompts “Art world grapples with NFTs” and “Museums Are Trying to Figure Out What NFT Art Is Worth” produced confusing results.

DALL-E clearly needed more guidance, so I described the scene: Two art handlers wearing white gloves moving a glitched Starry Night. At this point I had spent a few hours researching the best syntax and language to use with DALL-E. Adding “digital art, cyberpunk” to my prompt immediately elevated what the program generated, well beyond what I could have imagined on my own.

SCORE

Humanity: 2

DALL-E: 2

The Final Verdict: Let’s Call It a Draw

DALL-E never gave me a satisfying image on the first try — there was always a workshopping process. It takes time and research to learn the language of DALL-E. Inputting “oil painting” will give you a specific look, as will referencing certain aesthetic genres like Cyberpunk or Impressionism. You can even reference the styles of well-known artists from history by adding their names to the prompt. In that regard DALL-E is not so different from human creatives — the key to getting a satisfying image from either is to know the best way to ask.

As I refined my techniques, the process began to feel shockingly collaborative; I was working with DALL-E rather than using it. DALL-E would show me its work, and I’d adjust my prompt until I was satisfied. No matter how specific I was with my language, I was often surprised by the results — DALL-E had final say in what it generated.

OpenAI views DALL-E as a sketching tool to augment and extend human creativity, not replace it. As compelling as what DALL-E generated was, it couldn’t have gotten to these visuals without my guidance and knowledge. Coaxing out a satisfying image from the program requires creativity, and the knowledge of how to describe those ideas in an executable way. Still, AI technology is evolving at a rapid pace, and it’s hard to shake the feeling that we are on the precipice of a major paradigm shift in how we all do our jobs. The creativity of DALL-E is limited by the imagination of the user behind it, so I won’t lose sleep over my AI replacement — for now.